Microsoft’s Recall Under Fire: Privacy Concerns and Industry Response

Microsoft's Recall feature, a key component of its Copilot+ PCs, has sparked intense debate and raised serious privacy concerns. Designed as a "photographic memory" for your computer, Recall continuously takes screenshots and runs optical character recognition (OCR) on them so that users can search for and retrieve anything they have previously seen on screen. However, the feature's potential for misuse and data leakage has drawn strong reactions from security experts and industry professionals.

The initial announcement of Recall was met with immediate criticism, prompting Microsoft to postpone its release and add security enhancements. These updates include requiring users to opt in during setup, encrypting the snapshot database with keys protected by the device's hardware TPM, and adding a filter intended to keep sensitive information such as payment details or passwords out of the captures. Despite these changes, concerns persist.

One significant issue is the unreliability of the sensitive data filter. Testers have reported instances where confidential data slipped through and ended up in the OCR database. Furthermore, Recall captures conversations with other people, who never agreed to be recorded, potentially violating their privacy and undermining the data retention policies of messaging apps, such as messages that are supposed to disappear. Signal has gone as far as using a DRM flag to keep its windows from being captured.
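To illustrate why a filter of this kind can miss things, here is a minimal sketch of a pattern-based check for payment card numbers in OCR text. It is not Microsoft's implementation, whose details are not described here; the names `CARD_CANDIDATE`, `luhn_valid`, and `looks_sensitive` are hypothetical, and the approach (a regular expression plus the Luhn checksum) is only one common way such filters are built.

```python
import re

# Hypothetical pattern: 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def looks_sensitive(ocr_text: str) -> bool:
    """Flag text that contains something shaped like a valid card number."""
    for match in CARD_CANDIDATE.finditer(ocr_text):
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):
            return True
    return False
```

A filter like this flags a cleanly recognized card number, but a single OCR misread (a digit read as a letter, for example) or a secret with no recognizable shape, such as a free-form password, passes straight through. That is the kind of gap testers have described.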

Signal has taken a bold step, blocking Windows Recall by default because Microsoft offers developers no granular way to exclude their apps. It relies on "one weird trick": a DRM setting that was designed to protect copyrighted material, repurposed to prevent screenshots of Signal conversations. This highlights the need for Microsoft to provide proper developer tools and to consider the privacy implications of AI-powered systems more carefully.
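The general mechanism behind this kind of screenshot blocking on Windows can be sketched as follows. This is not Signal's code (Signal Desktop is built on Electron and uses that framework's content protection setting); it is an assumed equivalent that calls the Win32 function `SetWindowDisplayAffinity` directly through `ctypes`. The helper name `exclude_window_from_capture` is hypothetical, and the sketch assumes Recall honors this flag the same way other capture tools do.

```python
import ctypes
import sys

# Display affinity values from the Win32 API (winuser.h).
WDA_NONE = 0x00000000
WDA_EXCLUDEFROMCAPTURE = 0x00000011  # available from Windows 10 version 2004


def exclude_window_from_capture(hwnd: int) -> bool:
    """Ask Windows to leave the given window out of screen captures.

    Returns True on success. Returns False on failure, and always on
    non-Windows platforms. The window must belong to the calling process.
    """
    if sys.platform != "win32":
        return False
    user32 = ctypes.WinDLL("user32", use_last_error=True)
    # BOOL SetWindowDisplayAffinity(HWND hWnd, DWORD dwAffinity)
    user32.SetWindowDisplayAffinity.argtypes = [ctypes.c_void_p, ctypes.c_uint32]
    user32.SetWindowDisplayAffinity.restype = ctypes.c_int
    return bool(user32.SetWindowDisplayAffinity(hwnd, WDA_EXCLUDEFROMCAPTURE))
```

The flag is all-or-nothing: it hides the window from every capture tool, including screenshots the user might legitimately want to take, which is why it is a blunt substitute for a dedicated opt-out API.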

The security risks associated with Recall are amplified when unauthorized individuals gain physical access to a computer or when attackers exploit Windows vulnerabilities for remote access. Encryption protects the snapshot database at rest, but an attacker who takes over the signed-in user's session may still be able to read and exfiltrate its contents, so data exfiltration remains a serious concern.

Performance and battery life are also affected by Recall. The continuous capture and processing of screenshots can consume significant memory and computing resources, particularly during gaming sessions or when the device is running on battery.

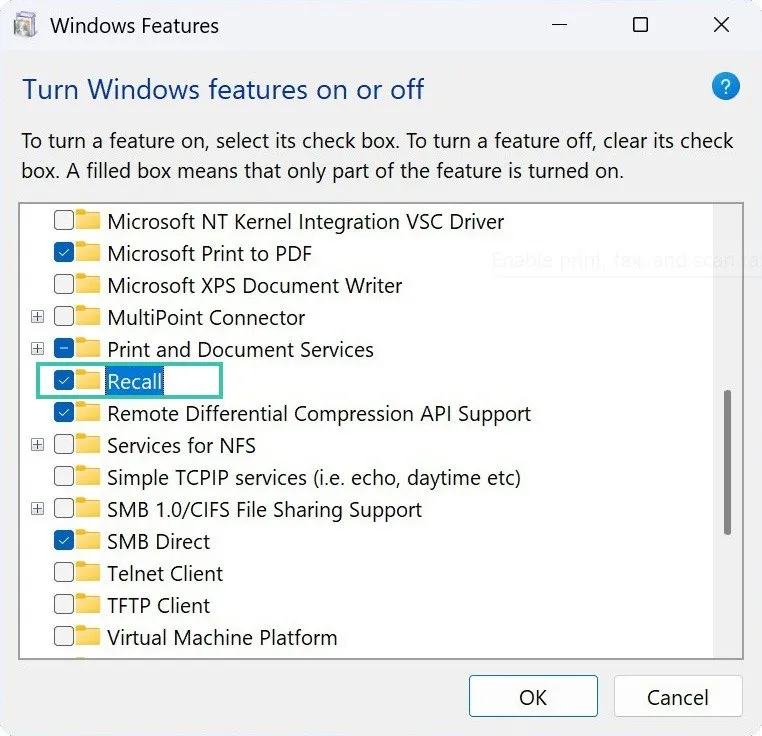

Many firms, including CPA firms, are opting to disable Recall on their devices despite Microsoft's security enhancements. Companies like Navolio & Tallman LLP and LBMC cite concerns about inadvertent storage of sensitive data, potential prompt injection attacks, insider threats, and compliance with data protection laws. While organizations can control their own devices, the risk from third parties who have Recall enabled remains a concern.

The indirect third-party risk is that anything shared with someone who has Recall enabled will be saved to that person's device, which could still result in data leakage and cyber incidents. Firms are emphasizing the need for open communication, employee education, and vigilance regarding data security, while acknowledging the limits of their control over external parties.

Microsoft's Recall feature represents a bold step towards AI-powered productivity, but its inherent privacy and security risks cannot be ignored. The industry's response highlights the need for a balanced approach, where innovation is tempered by a strong commitment to user privacy and data protection. Ultimately, users face a critical decision: is the convenience of Recall worth the potential loss of control over their personal data?

What are your thoughts on Microsoft's Recall feature? Share your comments and concerns below!